Copy objects between S3 buckets and AWS regions using replication

Having trouble conquering cross-region replication with S3? Let us help.

Amazon Simple Storage Service (S3) replication allows you to automatically and asynchronously copy objects between buckets within the same or different AWS accounts.

There are two types: Cross-Region Replication and Same-Region Replication.

Cross-Region Replication (CRR) was launched in 2015 and can be useful for at least a couple of reasons. It can help you comply with industry regulations that require you to store copies of data on a global scale, and it can reduce latency by bringing data closer to users or analytical workloads that you have around the world.

Launched in 2019, Same-Region Replication (SRR) is ideal for aggregating data from multiple buckets, such as moving log files into a single bucket for processing.

Additionally, when applied to two AWS accounts, SRR can help you synchronise your production and test environments, or maintain copies of data in a separate AWS account when regulations prevent you from transferring to another AWS region.

Setting up replication between two S3 buckets

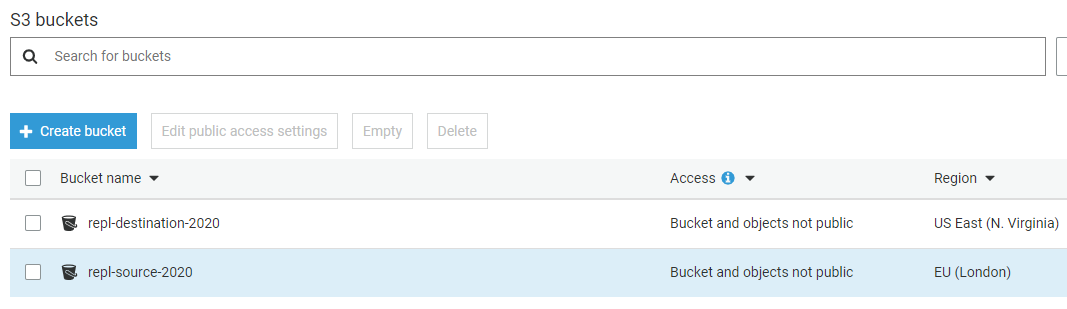

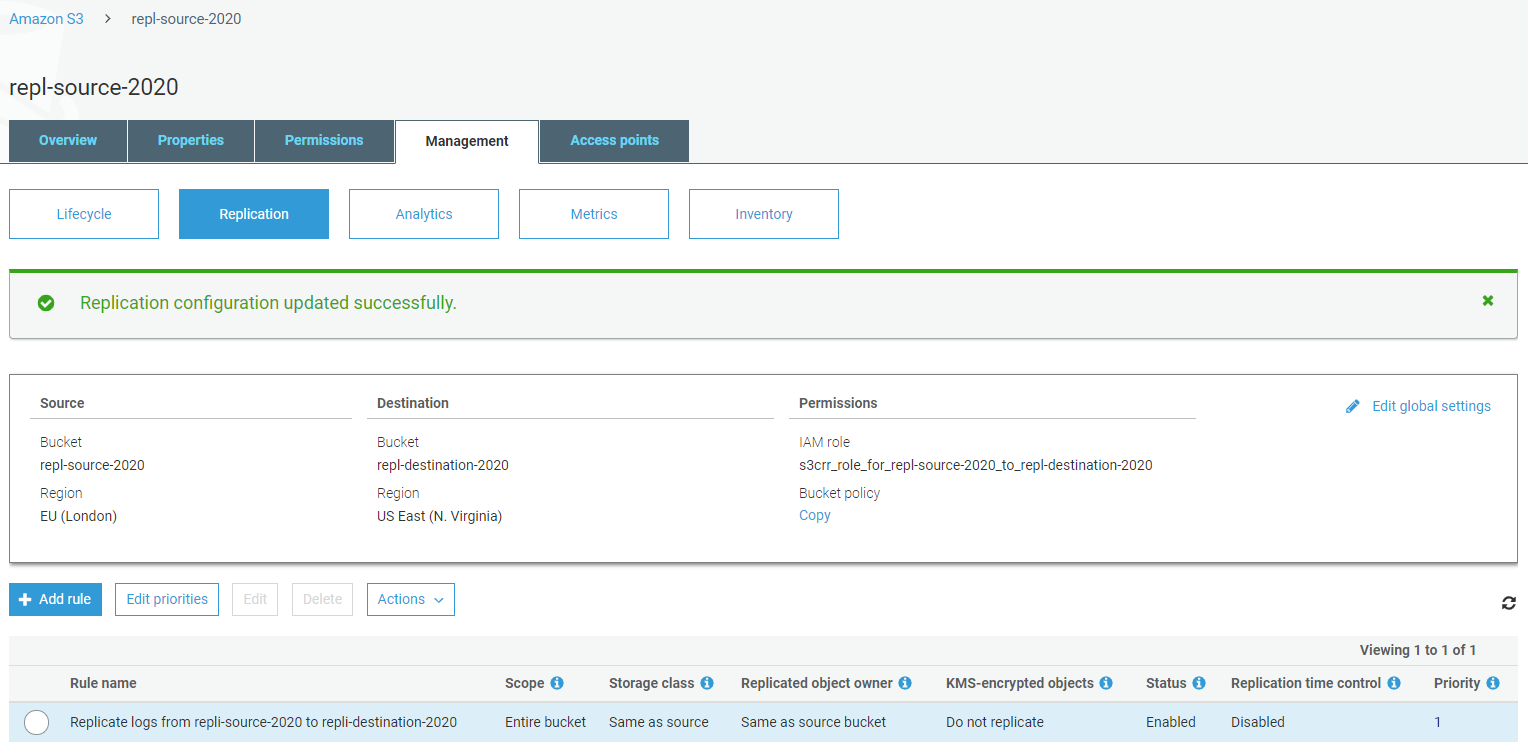

Consider this scenario: we want to set up replication between two buckets, with the source in EU (London) and the destination in US East (N. Virginia). This is a cross-region example, although the setup for SRR is the same.

In the AWS Management Console, we can see our two buckets:

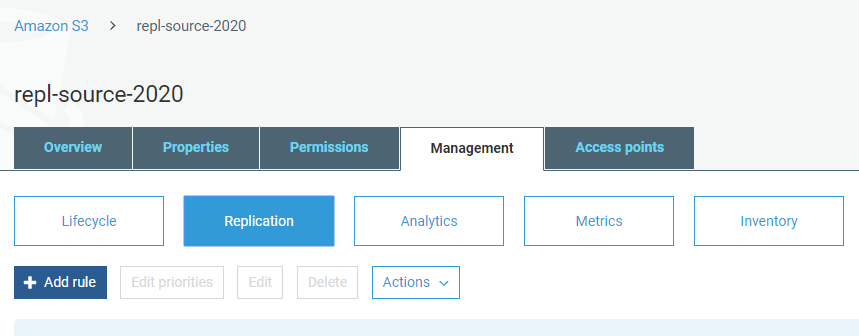

Select the source bucket and go to the Management > Replication tab.

Click on the Add rule button.

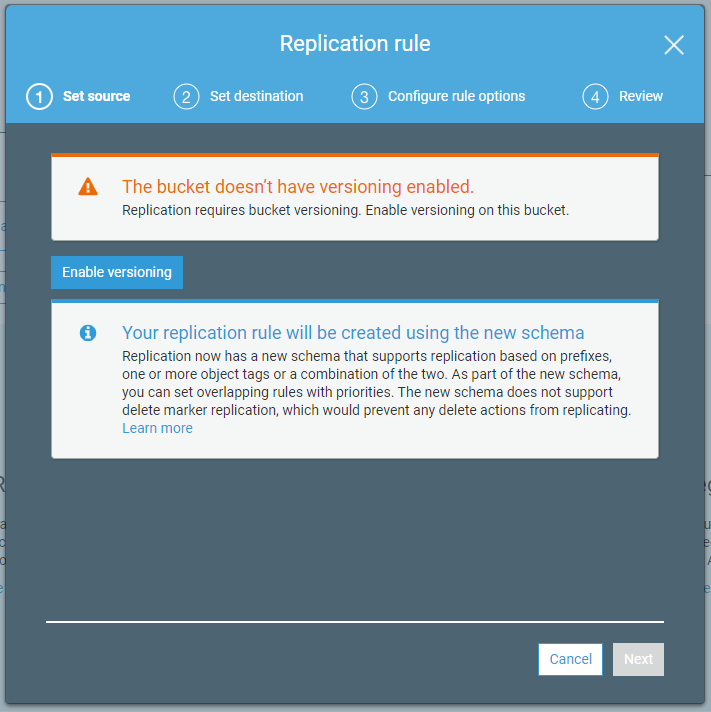

Replication requires versioning to be enabled on both the source and destination buckets, so you’ll need to enable it before going any further.
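
The versioning check can also be scripted. The following is a minimal sketch, assuming an S3 client object that exposes `get_bucket_versioning` and `put_bucket_versioning` (as the boto3 S3 client does); the function name `ensure_versioning` is hypothetical and only for illustration.

```python
def ensure_versioning(s3_client, bucket):
    """Enable versioning on `bucket` unless it is already enabled.

    Returns True if the bucket was changed, False if versioning was already on.
    `s3_client` is assumed to behave like a boto3 S3 client.
    """
    # The response has no "Status" key for a bucket that was never versioned,
    # and "Suspended" for a bucket where versioning was paused.
    status = s3_client.get_bucket_versioning(Bucket=bucket).get("Status")
    if status == "Enabled":
        return False
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    return True


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   s3 = boto3.client("s3")
#   for name in ("repl-source-2020", "repl-destination-2020"):
#       ensure_versioning(s3, name)
```

Passing the client in as a parameter keeps the helper easy to exercise with a stub before pointing it at real buckets.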

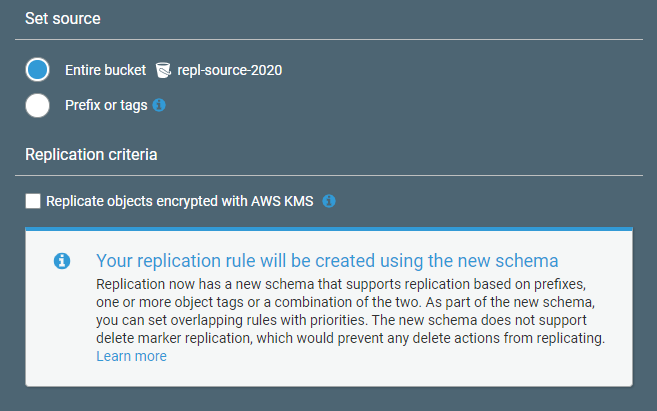

You can choose to replicate all objects (including encrypted ones if required) or just those objects with a particular prefix or tag.

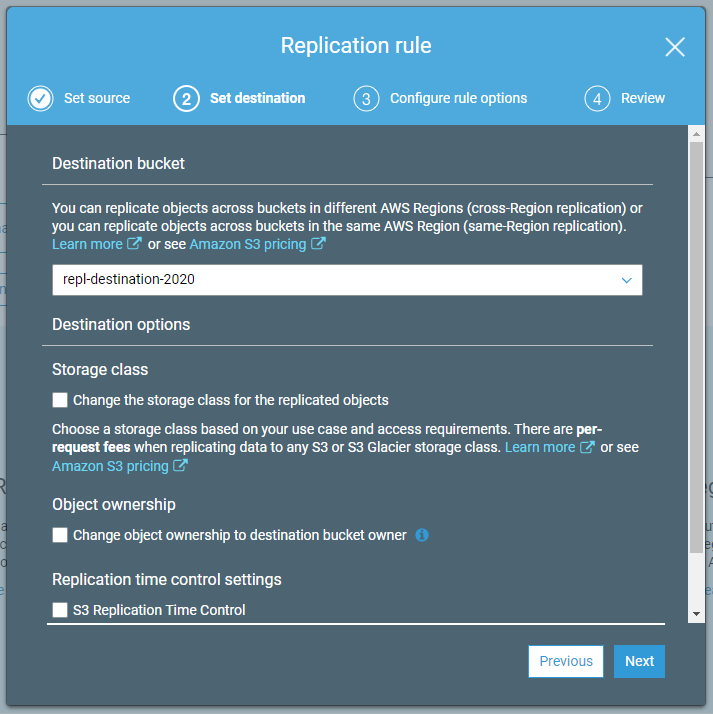

Select the destination bucket and enable versioning on it if you haven’t already. Note the Storage class and Replication time control settings too.

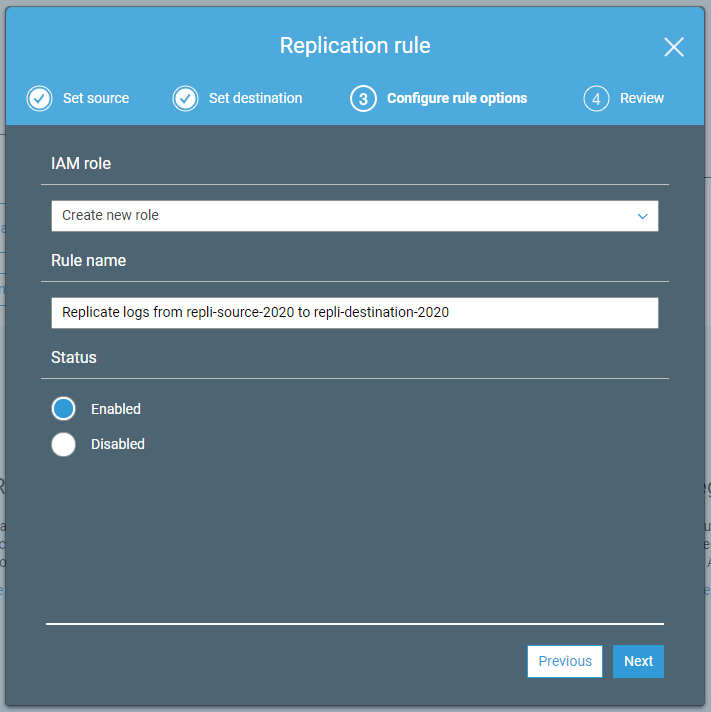

For now, create a new IAM role, and enter a rule name.

Verify the configuration settings on the final screen and save the rule. Your rule should now be enabled and listed on the Replication page.

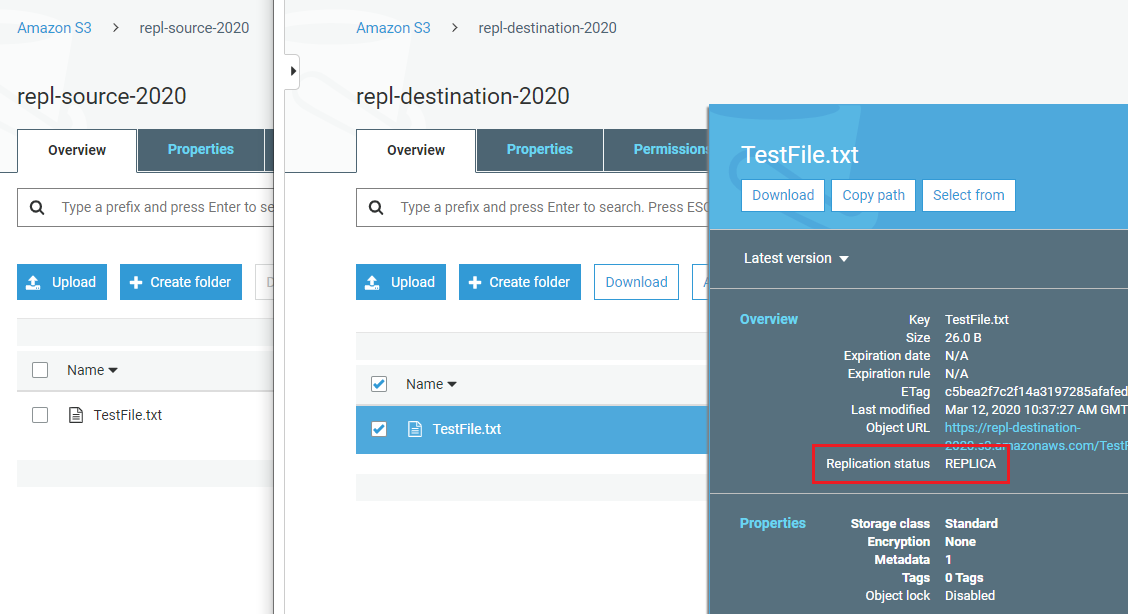
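
For reference, the rule created in the console corresponds roughly to a replication configuration document. The sketch below builds one as a plain Python dictionary in the shape accepted by the S3 `PutBucketReplication` API. The helper name `build_replication_config`, the role ARN, the account ID and the rule name are all hypothetical placeholders.

```python
import json


def build_replication_config(role_arn, destination_bucket, rule_id,
                             prefix="", storage_class=None):
    """Return a replication configuration with a single enabled rule.

    An empty prefix matches every object in the source bucket.
    """
    destination = {"Bucket": f"arn:aws:s3:::{destination_bucket}"}
    if storage_class:
        # Optional: store replicas in a different storage class.
        destination["StorageClass"] = storage_class
    rule = {
        "ID": rule_id,
        "Priority": 1,
        "Status": "Enabled",
        "Filter": {"Prefix": prefix},
        # Rules that use Filter also state how delete markers are handled.
        "DeleteMarkerReplication": {"Status": "Disabled"},
        "Destination": destination,
    }
    return {"Role": role_arn, "Rules": [rule]}


if __name__ == "__main__":
    config = build_replication_config(
        role_arn="arn:aws:iam::123456789012:role/example-replication-role",
        destination_bucket="repl-destination-2020",
        rule_id="example-rule",
    )
    print(json.dumps(config, indent=2))
    # With boto3, this dictionary could be passed as:
    #   s3.put_bucket_replication(Bucket="repl-source-2020",
    #                             ReplicationConfiguration=config)
```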

With the new rule in place, you should find that any new or updated objects uploaded to the source bucket are replicated to the destination bucket after a few seconds.

In the screenshot below, note the replication status of REPLICA for the replicated object in the destination bucket.
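
The same status can be read programmatically from an object's metadata. This is a minimal sketch, assuming a client that behaves like the boto3 S3 client; the helper name `replication_status` and the object key in the usage comment are hypothetical.

```python
def replication_status(s3_client, bucket, key):
    """Return the replication status reported for an object, or None.

    Source objects typically report PENDING, COMPLETED or FAILED;
    objects created by replication report REPLICA. The field is absent
    when the object is not covered by any replication rule.
    """
    response = s3_client.head_object(Bucket=bucket, Key=key)
    return response.get("ReplicationStatus")


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   s3 = boto3.client("s3")
#   print(replication_status(s3, "repl-destination-2020", "example.txt"))
```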

S3 Replication Time Control

S3 Replication Time Control is an optional feature designed to ensure that most objects are replicated within seconds and at least 99.99% within 15 minutes. It’s backed by a Service Level Agreement (SLA) and can be helpful if your business requirements or industry regulations require more confidence than just “most within seconds”.
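
In a replication configuration document, Replication Time Control is expressed on the rule's destination. The sketch below shows one way to switch it on for an existing rule dictionary. The helper name `with_replication_time_control` and the sample rule are hypothetical; the 15-minute values mirror the threshold described above, and replication metrics are enabled alongside it because the API expects the two blocks together.

```python
import copy


def with_replication_time_control(rule):
    """Return a copy of `rule` with S3 Replication Time Control enabled."""
    updated = copy.deepcopy(rule)  # leave the caller's rule untouched
    destination = updated["Destination"]
    destination["ReplicationTime"] = {
        "Status": "Enabled",
        "Time": {"Minutes": 15},
    }
    # Metrics are specified together with ReplicationTime.
    destination["Metrics"] = {
        "Status": "Enabled",
        "EventThreshold": {"Minutes": 15},
    }
    return updated


# Hypothetical rule used only to demonstrate the helper.
sample_rule = {
    "ID": "example-rule",
    "Priority": 1,
    "Status": "Enabled",
    "Filter": {"Prefix": ""},
    "DeleteMarkerReplication": {"Status": "Disabled"},
    "Destination": {"Bucket": "arn:aws:s3:::repl-destination-2020"},
}

if __name__ == "__main__":
    print(with_replication_time_control(sample_rule)["Destination"])
```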

IAM roles for SRR and CRR

During the setup process, we allowed AWS to create a new IAM role for the replication. In practice, you may wish to create your own role and use it for replication rules covering several buckets. You can do this using a permissions policy like the following, replacing the example Amazon Resource Names (ARNs) with your own source and destination buckets.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Action": [
            "s3:Get*",
            "s3:ListBucket"
          ],
          "Effect": "Allow",
          "Resource": [
            "arn:aws:s3:::repl-source-2020",
            "arn:aws:s3:::repl-source-2020/*"
          ]
        },
        {
          "Action": [
            "s3:ReplicateObject",
            "s3:ReplicateDelete",
            "s3:ReplicateTags",
            "s3:GetObjectVersionTagging"
          ],
          "Effect": "Allow",
          "Resource": "arn:aws:s3:::repl-destination-2020/*"
        }
      ]
    }

Useful tips

- Programmatic replication - in addition to the AWS Management Console, you can create and update replication rules using the AWS Command Line Interface (CLI) and AWS SDK

- Versioning - as above, it needs to be enabled on both buckets; also remember that once enabled, it can be suspended but not removed

- Storage classes - within a replication rule, you can specify a different storage class for replicated objects; you can also complement this with a separate lifecycle rule in your destination bucket

- Existing objects - existing objects in the source bucket are not replicated; use a COPY command via the CLI to get the destination bucket in sync before enabling your replication rule

- Deleted objects - by default, object deletions (or more specifically, delete markers) in the source bucket are not replicated; this is designed to protect data from accidental or malicious deletions
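
As a complement to the existing-objects tip, the initial sync could also be scripted with the AWS SDK rather than the CLI. This is a rough sketch, not the CLI approach mentioned above: it assumes a client that behaves like the boto3 S3 client, and the helper name `copy_existing_objects` is hypothetical. It copies only the current version of each object, and a single `copy_object` call is limited to objects of up to 5 GB.

```python
def copy_existing_objects(s3_client, source_bucket, destination_bucket, prefix=""):
    """Copy every current object under `prefix` to the destination bucket.

    Returns the list of keys that were copied.
    """
    copied = []
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=source_bucket, Prefix=prefix):
        # "Contents" is missing from a page when no keys match.
        for obj in page.get("Contents", []):
            s3_client.copy_object(
                Bucket=destination_bucket,
                Key=obj["Key"],
                CopySource={"Bucket": source_bucket, "Key": obj["Key"]},
            )
            copied.append(obj["Key"])
    return copied


# Possible usage, assuming boto3 is installed and credentials are configured:
#   import boto3
#   s3 = boto3.client("s3")
#   copy_existing_objects(s3, "repl-source-2020", "repl-destination-2020")
```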