Transition S3 objects between storage classes using lifecycle rules

Our AWS consultants provide the low-down on transitioning S3 objects to different storage classes.

Amazon Simple Storage Service (S3) offers a range of storage classes which are modelled and priced around factors such as how often and how quickly you need to access objects.

With the AWS Well-Architected Framework in mind, specifically the Cost Optimisation pillar, you might consider storage classes before you upload objects to S3. In reality, you are much more likely to do this after receiving your first monthly AWS bill.

S3 costs can be ridiculously low, but they add up at scale. For instance, the monthly cost of storing 100 TB can range from around $2,300 (US East N. Virginia, S3 Standard) down to around $100 (US East N. Virginia, S3 Glacier Deep Archive).
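
As a rough illustration of the arithmetic behind those figures, the sketch below multiplies a stored volume by a flat per-GB monthly rate. The rates, the decimal terabyte conversion, and the function name are assumptions chosen to land close to the numbers above; real S3 pricing is tiered, varies by region, and changes over time, so treat this as a back-of-the-envelope estimate only.

```python
# Assumed flat storage rates in USD per GB per month (illustrative only;
# real S3 pricing is tiered, region-specific, and subject to change).
ASSUMED_RATE_PER_GB_MONTH = {
    "STANDARD": 0.023,
    "DEEP_ARCHIVE": 0.00099,
}

GB_PER_TB = 1000  # assumption: decimal terabytes


def monthly_storage_cost(terabytes: float, storage_class: str) -> float:
    """Return an estimated monthly storage cost in USD, rounded to cents."""
    rate = ASSUMED_RATE_PER_GB_MONTH[storage_class]
    return round(terabytes * GB_PER_TB * rate, 2)


standard_cost = monthly_storage_cost(100, "STANDARD")
deep_archive_cost = monthly_storage_cost(100, "DEEP_ARCHIVE")
print(f"Standard: ${standard_cost:,.2f}  Deep Archive: ${deep_archive_cost:,.2f}")
```

The estimate covers storage only. Request charges, retrieval fees, and minimum storage durations are left out, and they can matter a great deal for the archive classes.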

For this reason, it pays to select and use the storage classes that are most aligned to your business requirements.

| Storage class | Designed for | Durability (designed for) | Availability (designed for) | Availability SLA | Availability Zones | First byte latency | Retrieval fee |
|---|---|---|---|---|---|---|---|
| Standard | Frequently accessed data | 99.999999999% (11 9s) | 99.99% | 99.9% | ≥3 | Milliseconds | n/a |
| Intelligent-Tiering | Long-lived data with changing or unknown access patterns | 99.999999999% (11 9s) | 99.9% | 99% | ≥3 | Milliseconds | n/a |
| Standard-IA | Long-lived, infrequently accessed data | 99.999999999% (11 9s) | 99.9% | 99% | ≥3 | Milliseconds | Per GB retrieved |
| One Zone-IA | Long-lived, infrequently accessed, non-critical data | 99.999999999% (11 9s) | 99.5% | 99% | 1 | Milliseconds | Per GB retrieved |
| Glacier | Archive data with retrieval times ranging from minutes to hours | 99.999999999% (11 9s) | 99.99% | 99.9% | ≥3 | Minutes or hours | Per GB retrieved |
| Glacier Deep Archive | Archive data that rarely, if ever, needs to be accessed, with retrieval times in hours | 99.999999999% (11 9s) | 99.99% | 99.9% | ≥3 | Hours | Per GB retrieved |

You can manually select the storage class for one or more objects during and after upload, or you can do it automatically using lifecycle rules which transition objects between storage classes based on your criteria.
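
For the manual route, a minimal sketch using the AWS SDK for Python (boto3) might look like the following. It assumes boto3 is installed and credentials are configured; the bucket name, key, and helper function names are hypothetical. The helpers only build the arguments for the S3 `put_object` and `copy_object` calls, so they can be inspected without touching AWS.

```python
def upload_kwargs(bucket: str, key: str, body: bytes, storage_class: str) -> dict:
    """Arguments for put_object that set the storage class at upload time."""
    return {"Bucket": bucket, "Key": key, "Body": body, "StorageClass": storage_class}


def change_class_kwargs(bucket: str, key: str, storage_class: str) -> dict:
    """Arguments for copy_object that copy an object onto itself with a new class."""
    return {
        "Bucket": bucket,
        "Key": key,
        "CopySource": {"Bucket": bucket, "Key": key},
        "StorageClass": storage_class,
        "MetadataDirective": "COPY",  # keep the existing metadata
    }


def run_example() -> None:
    """Upload an object as Standard-IA, then move it to One Zone-IA."""
    import boto3  # imported here so the helpers above work without boto3

    s3 = boto3.client("s3")
    # "example-bucket" and the key are placeholders, not real resources.
    s3.put_object(**upload_kwargs("example-bucket", "reports/summary.csv", b"a,b\n", "STANDARD_IA"))
    s3.copy_object(**change_class_kwargs("example-bucket", "reports/summary.csv", "ONEZONE_IA"))
```

Doing this object by object quickly becomes tedious, which is where lifecycle rules come in.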

Lifecycle rules can:

- Apply to all objects in a bucket, or just those with matching prefixes and/or tags

- Target current and previous versions of objects

- Expire current and previous versions based on age

- Clean up delete markers and incomplete multipart uploads

They are simple to set up using the AWS Management Console, and can also be created and managed using the AWS CLI and SDKs.

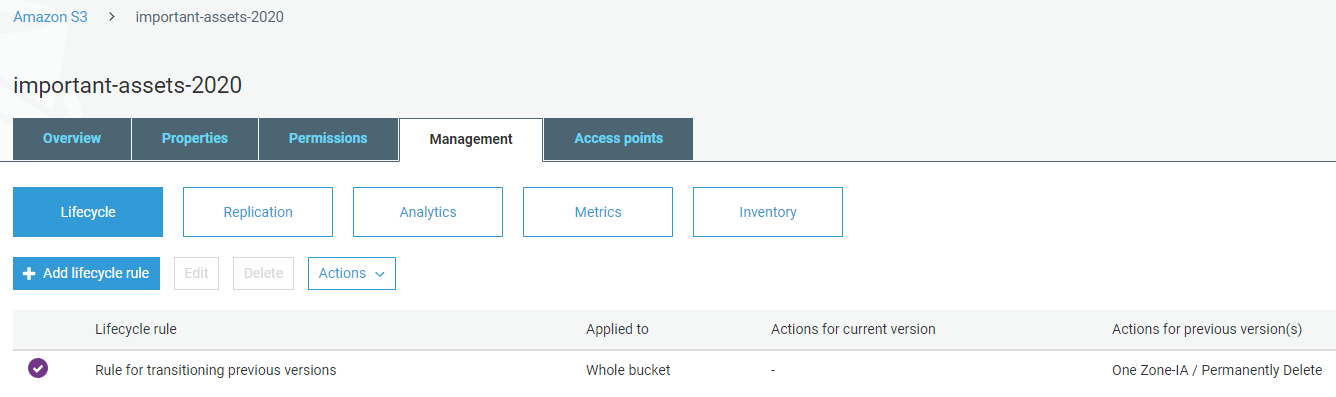

To demonstrate how simple this is, let’s say we want to create a lifecycle rule that transitions previous versions of objects to the One Zone-IA storage class at 30 days old, and then permanently deletes them at 60 days old.

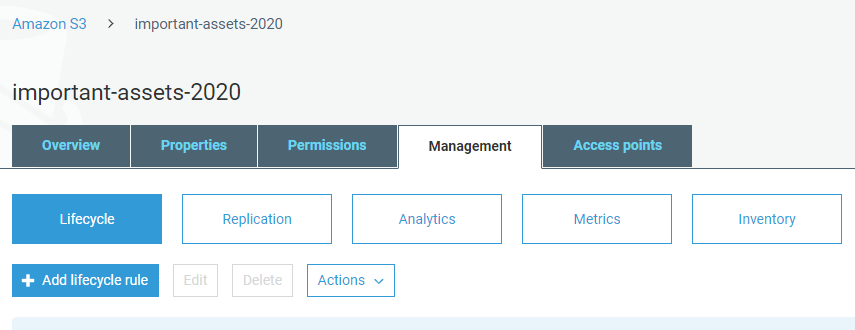

To begin, select the bucket and go to the Management > Lifecycle tab.

Click on the Add lifecycle rule button.

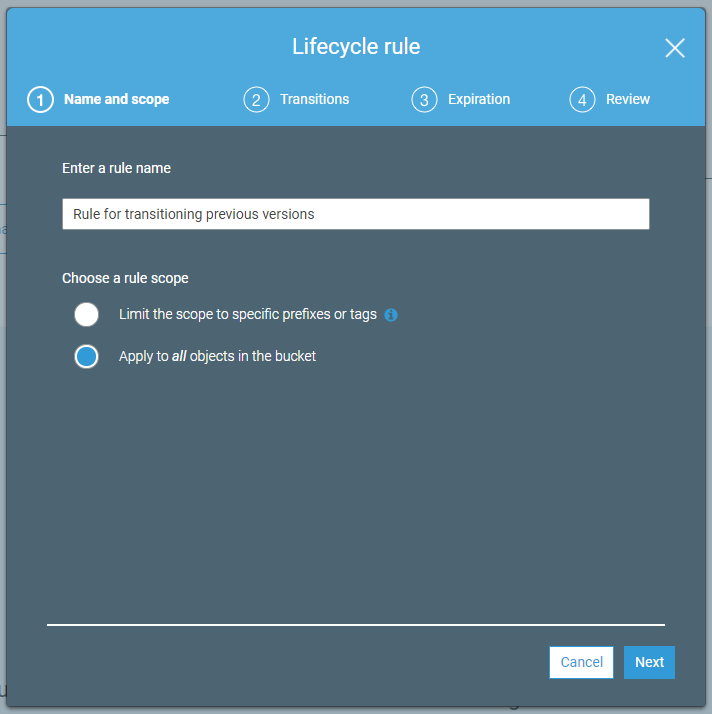

Enter a rule name and choose the scope; you can apply the rule to all objects or just those with a particular prefix or tag.

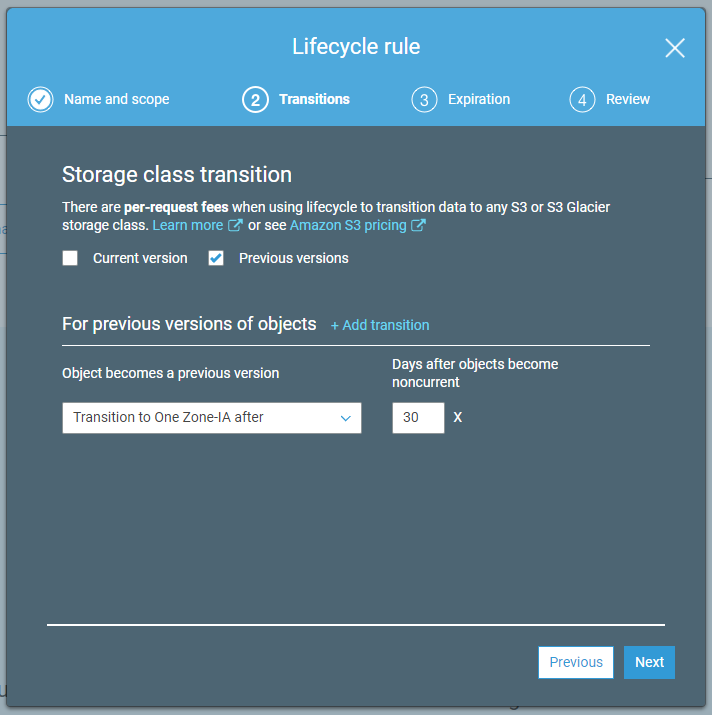
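
If you were creating the same rule through the API rather than the console, the name and scope map to the `ID` and `Filter` elements of a lifecycle rule. The sketch below shows one way to build them; the function names and the example prefix and tag are hypothetical, while the `Prefix`, `Tag`, and `And` shapes follow the S3 lifecycle configuration format.

```python
from typing import Dict, Optional


def build_filter(prefix: Optional[str] = None,
                 tags: Optional[Dict[str, str]] = None) -> dict:
    """Build the Filter element of a lifecycle rule from a prefix and/or tags."""
    tag_list = [{"Key": k, "Value": v} for k, v in (tags or {}).items()]
    if prefix and tag_list:
        return {"And": {"Prefix": prefix, "Tags": tag_list}}
    if len(tag_list) > 1:
        return {"And": {"Tags": tag_list}}
    if tag_list:
        return {"Tag": tag_list[0]}
    # An empty prefix matches every object in the bucket.
    return {"Prefix": prefix or ""}


def build_rule_header(name: str,
                      prefix: Optional[str] = None,
                      tags: Optional[Dict[str, str]] = None) -> dict:
    """Return the name, status, and scope portion of a lifecycle rule."""
    return {"ID": name, "Status": "Enabled", "Filter": build_filter(prefix, tags)}


print(build_rule_header("archive-old-versions"))
print(build_rule_header("archive-logs", prefix="logs/", tags={"tier": "archive"}))
```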

Configure the settings so that previous versions are transitioned to One Zone-IA after 30 days. Note the ability to add multiple transitions within a single rule.

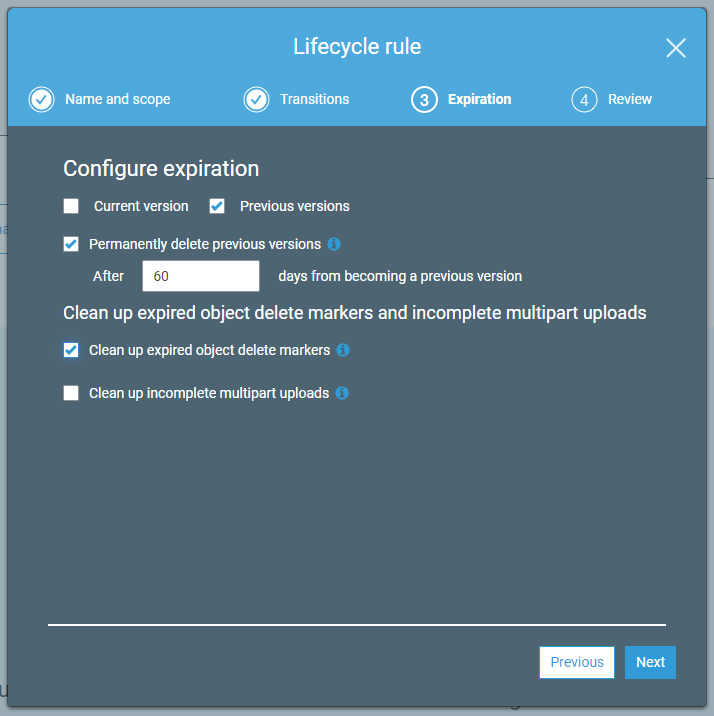
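
In API terms, transitions for previous versions are expressed as a list of `NoncurrentVersionTransitions` entries, one per target storage class. The helper below is a hypothetical sketch that turns a simple schedule into that list, sorted by age so that several transitions in a single rule read in the order they will fire.

```python
from typing import Dict, List


def noncurrent_transitions(schedule: Dict[str, int]) -> List[dict]:
    """Turn {storage_class: days_noncurrent} into NoncurrentVersionTransitions entries."""
    ordered = sorted(schedule.items(), key=lambda item: item[1])
    return [
        {"NoncurrentDays": days, "StorageClass": storage_class}
        for storage_class, days in ordered
    ]


# The single transition used in this walkthrough.
print(noncurrent_transitions({"ONEZONE_IA": 30}))

# A hypothetical rule with two transitions (not part of this walkthrough).
print(noncurrent_transitions({"GLACIER": 90, "ONEZONE_IA": 30}))
```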

You can expire current and previous versions of objects in versioning-enabled buckets. For current versions, expiration marks the current version as a previous version and places a delete marker as the current version. For previous versions, expiration permanently deletes the objects.

Choose the settings to permanently delete previous versions after 60 days, and to clean up expired object delete markers; this removes an object's delete marker once all of its previous versions have expired.
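
Expressed as lifecycle rule elements, these two settings might look like the sketch below. The function name is hypothetical; `NoncurrentVersionExpiration` and the `ExpiredObjectDeleteMarker` flag inside `Expiration` are the corresponding fields in the S3 lifecycle configuration.

```python
def previous_version_cleanup(days_noncurrent: int,
                             remove_expired_delete_markers: bool = True) -> dict:
    """Return the rule elements that delete old versions and tidy up delete markers."""
    actions = {
        # Permanently delete a version once it has been noncurrent this long.
        "NoncurrentVersionExpiration": {"NoncurrentDays": days_noncurrent},
    }
    if remove_expired_delete_markers:
        # Remove delete markers that no longer have any versions behind them.
        actions["Expiration"] = {"ExpiredObjectDeleteMarker": True}
    return actions


print(previous_version_cleanup(60))
```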

Verify the configuration settings on the final screen and save the rule. Your rule should now be enabled and listed on the Lifecycle page.
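
For comparison, the same rule can be created with the AWS SDK for Python (boto3). This is a minimal sketch rather than a definitive implementation: it assumes boto3 is installed, credentials are configured, and versioning is already enabled on the bucket. The bucket name, rule name, and function names are hypothetical.

```python
def build_lifecycle_configuration(rule_name: str) -> dict:
    """Build the walkthrough rule: One Zone-IA at 30 days, delete at 60 days."""
    rule = {
        "ID": rule_name,
        "Status": "Enabled",
        "Filter": {"Prefix": ""},  # empty prefix: apply to all objects
        "NoncurrentVersionTransitions": [
            {"NoncurrentDays": 30, "StorageClass": "ONEZONE_IA"},
        ],
        "NoncurrentVersionExpiration": {"NoncurrentDays": 60},
        "Expiration": {"ExpiredObjectDeleteMarker": True},
    }
    return {"Rules": [rule]}


def apply_and_list(bucket: str, rule_name: str) -> list:
    """Save the rule to the bucket, then return (ID, Status) for each rule found."""
    import boto3  # imported here so the builder above works without boto3

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_lifecycle_configuration(rule_name),
    )
    response = s3.get_bucket_lifecycle_configuration(Bucket=bucket)
    return [(rule.get("ID"), rule["Status"]) for rule in response["Rules"]]


# Example call (placeholder names, requires AWS access):
# apply_and_list("example-bucket", "previous-versions-to-onezone-ia")
```

Note that `put_bucket_lifecycle_configuration` replaces the bucket's entire lifecycle configuration, so any existing rules you want to keep need to be included in the `Rules` list.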